Robotics projects

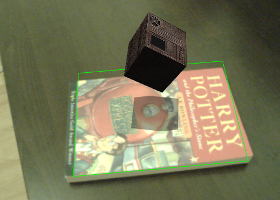

Autonomous navigation with LIDAR (2010)

This project provided mapping and trajectory planning features to the

Self localization and mapping (2011)

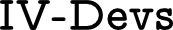

Integration of a then state-of-the-art simultaneous localization and mapping (SLAM) algorithm into Awabot's Emox robotic suite. Emox is an educational package built around the Emox/Sparx robotic platform. It includes modules for creating augmented reality, programming, and navigation applications. IV-devs' role was to create a SLAM module using the PTAMM algorithm. This enabled autonomous navigation and greatly extended the robot's capabilities.

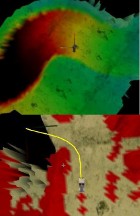

Crowd counting using HD video streams (2011)

The goal of this open-source project was to estimate the number of people in a crowd. At the time, no fully automated software existed for this task. HeadCounter uses a Haar classifier to detect faces in a crowd and tracks each one individually to estimate the flow rate and the total size of the crowd. HeadCounter is available on GitHub.
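The detect-then-track idea described above can be illustrated with a minimal sketch. It is not HeadCounter's actual code: it assumes face detections have already been reduced to (x, y) centre points per frame (for example by a Haar cascade detector), and it uses a simple greedy nearest-neighbour association as a stand-in for whatever tracking HeadCounter really performs. The names `Track`, `CentroidTracker`, `max_distance`, and `max_missed` are hypothetical.

```python
from dataclasses import dataclass
from math import hypot


@dataclass
class Track:
    track_id: int
    position: tuple   # (x, y) centre of the last matched detection
    last_seen: int    # index of the frame where the track was last matched


class CentroidTracker:
    """Greedy nearest-neighbour tracker (illustrative assumption, not HeadCounter's method)."""

    def __init__(self, max_distance=50.0, max_missed=5):
        self.max_distance = max_distance  # max pixel jump allowed between frames
        self.max_missed = max_missed      # frames a track may go unmatched before removal
        self.tracks = []
        self.next_id = 0

    def update(self, frame_index, detections):
        """Associate this frame's detection centres with existing tracks."""
        # Forget tracks that have not been matched for too long.
        self.tracks = [
            t for t in self.tracks
            if frame_index - t.last_seen <= self.max_missed
        ]
        unmatched = list(detections)
        for track in self.tracks:
            if not unmatched:
                break
            # Pick the closest remaining detection for this track.
            nearest = min(
                unmatched,
                key=lambda d: hypot(d[0] - track.position[0],
                                    d[1] - track.position[1]),
            )
            distance = hypot(nearest[0] - track.position[0],
                             nearest[1] - track.position[1])
            if distance <= self.max_distance:
                track.position = nearest
                track.last_seen = frame_index
                unmatched.remove(nearest)
        # Every detection left over is treated as a newly seen person.
        for detection in unmatched:
            self.tracks.append(Track(self.next_id, detection, frame_index))
            self.next_id += 1

    @property
    def total_count(self):
        """Number of distinct tracks created so far (crowd size estimate)."""
        return self.next_id

    def flow_rate(self, fps, frames_elapsed):
        """Average number of new people per second over the processed frames."""
        return self.total_count * fps / frames_elapsed
```

Calling `update` once per frame with that frame's detection centres and then reading `total_count` and `flow_rate` gives the two quantities the project description mentions. A greedy match keeps the sketch short; a production tracker would more likely use a globally optimal assignment and a motion model.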

Vision and navigation in elevators for a Fetch robot (2017)

Vision and movement logic for a Cobotta arm robot (2019)

Fae-bot: an agriculture automation experiment (on-going)

The goal of this project is to explore the usability of suspended robots in agriculture automation. Suspended robots offer a compromise between wheeled robots and multicopters, and are potentially more affordable than either option. This project led to a collaboration with Machine Learning Tokyo, who subsequently published a paper about a deep learning control model for suspended robots (paper).